Data/AI buyers skim proposals on their phones. Lead with two specifics from the post, propose a tiny paid first milestone with Done = … acceptance criteria, attach one relevant artifact (notebook, metric chart, or Loom), and offer a simple next step. This playbook gives you a reusable upwork data science proposal template, lane-specific variants (analytics, data engineering, ML/NLP/CV, MLOps, LLM/RAG), and a crisp structure for a credible upwork ai proposal or machine learning upwork proposal that gets replies fast.

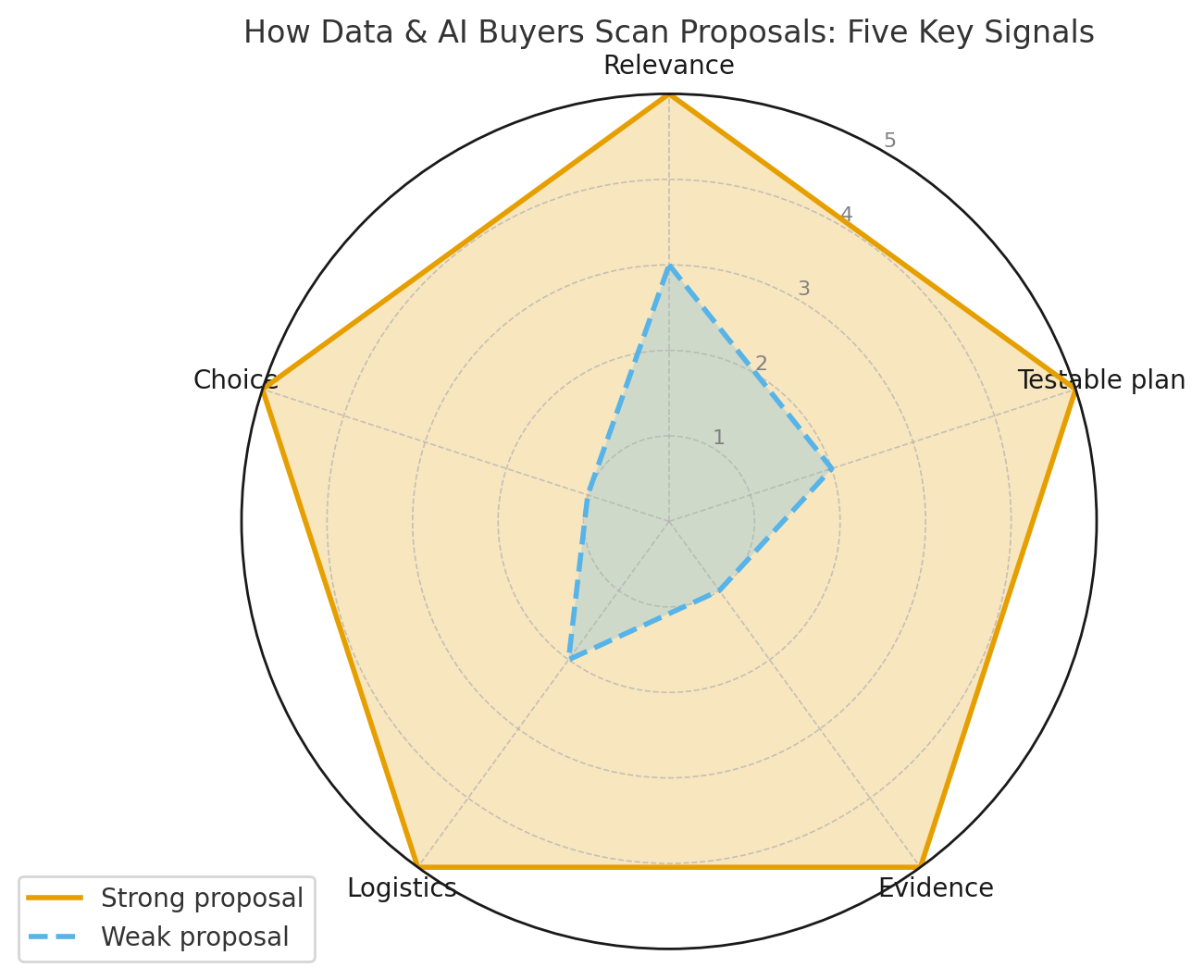

How data & AI buyers actually read proposals

Busy PMs, founders, and heads of data are scanning for five signals:

- Relevance in line 1–2: their problem, data shape, stack (e.g., dbt, BigQuery, Airflow, SageMaker, Vertex AI).

- A small, testable plan: a milestone they can approve this week.

- Evidence: one metric + one artifact tied to similar work.

- Logistics: access needs, security posture, time-zone overlap.

- A choice: quick call vs async 2-slide plan so replying is easy.

Everything below is engineered to hit those five needs in ~200 words up top, then expand briefly.

The Core Upwork Data Science Proposal Template (copy + personalize)

Use this as your default upwork data science proposal template. Replace {{braces}} with details from the job.

Subject: Practical plan for {{project}} — decision-ready in {{timeframe}}

Opener (two specifics): “Two details stood out: {{data source/shape}} and {{metric/KPI/constraint}}. Here’s a small first milestone that tests the approach before you commit to a larger build.”

Micro-plan (3 bullets):

- Align on goals and Done = {{acceptance_criteria}}; confirm access (data sample, schema, credentials).

- Build a {{notebook/dbt model/prototype}} to test {{approach}} on a {{X}}-row sample; log results ({{metric}}) against a baseline.

- Share a 1-page decision memo + Loom; agree next steps.

Proof (1 item): “Recent: {{result}} for a {{industry}} dataset (artifact: {{notebook link/metric chart}}).”

Logistics & trust: “I’m in {{timezone}} with {{overlap}} overlap; tools: Python/SQL, Pandas, scikit-learn, dbt, Airflow, MLflow, {{cloud}}. Light PII handling—scoped, encrypted, least-privilege.”

CTA: “Prefer a 10-minute fit call today, or I can send a 2-slide plan in a few hours—your pick.”

Short, specific, testable. Then expand with scope options.
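If you keep the template in a snippet manager or script, a small helper can fill the {{braces}} and refuse to produce a draft while any placeholder is still empty. This is a minimal sketch, assuming placeholders use the {{name}} form above; `fill_template` and the example field names are hypothetical.

```python
import re

# Matches {{placeholder}} tokens; the name is anything except braces.
PLACEHOLDER = re.compile(r"\{\{([^{}]+)\}\}")

def fill_template(template: str, values: dict[str, str]) -> str:
    """Fill {{placeholders}} from `values`; raise if any remain unfilled (hypothetical helper)."""
    def substitute(match: re.Match) -> str:
        key = match.group(1).strip()
        # Leave unknown placeholders in place so they can be reported below.
        return values.get(key, match.group(0))

    filled = PLACEHOLDER.sub(substitute, template)
    missing = PLACEHOLDER.findall(filled)
    if missing:
        raise ValueError(f"Unfilled placeholders: {sorted(set(missing))}")
    return filled

subject = "Practical plan for {{project}}, decision-ready in {{timeframe}}"
print(fill_template(subject, {"project": "your churn model", "timeframe": "5 days"}))
# Practical plan for your churn model, decision-ready in 5 days
```

Failing loudly on a leftover placeholder is deliberate: a proposal that still contains a literal {{metric}} is worse than no proposal.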

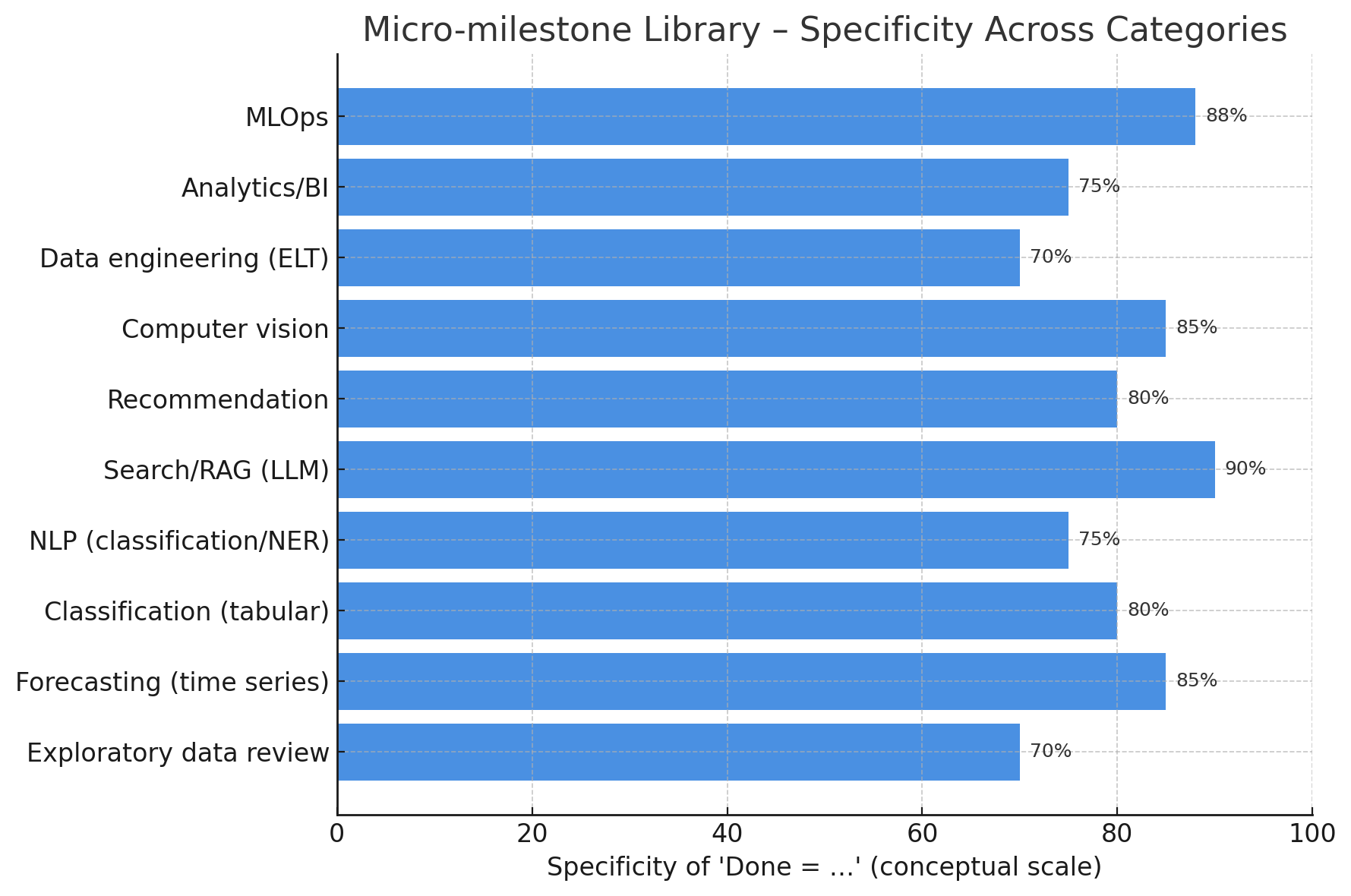

Micro-milestone library (useful “Done = …” lines for data & AI)

Buyers love concrete acceptance criteria. Borrow and tailor these:

- Exploratory data review: Done = schema profile (types, nulls, uniques), 10 data risks, and a baseline metric/heuristic benchmark.

- Forecasting (time series): Done = rolling forecast for {{N}} SKUs with cross-validation; MAE ≤ {{threshold}} vs baseline; holiday feature tested.

- Classification (tabular): Done = stratified CV with F1 ≥ {{target}} and calibration plot; SHAP summary for top 10 features.

- NLP (classification/NER): Done = tokenization pipeline + model with macro-F1 ≥ {{target}} on holdout; label quality check and confusion matrix.

- Search/RAG (LLM): Done = retrieval index + prompt chain; answer accuracy ≥ {{threshold}} on {{N}} golden questions; latency < {{ms}} p95.

- Recommendation: Done = offline NDCG@k ≥ {{target}}; cold-start strategy documented; online A/B plan outlined.

- Computer vision (detection): Done = mAP@0.5 ≥ {{target}} on holdout; augmentation plan; inference latency < {{ms}} on target device.

- Data engineering (ELT): Done = incremental dbt models + tests; Airflow DAG runs green; data freshness SLA documented.

- Analytics/BI: Done = Looker/Power BI dashboard with KPI logic documented; refresh schedule and row-level security set.

- MLOps: Done = experiment tracking (MLflow) + model registry; CI/CD pipeline for training/inference; rollback plan.
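Writing each Done = … line as data turns acceptance into a mechanical check. This is a minimal sketch, assuming you already have the metric values from your evaluation run. The `Criterion` class, the metric names, and the example thresholds are hypothetical placeholders, not recommended targets.

```python
import operator
from dataclasses import dataclass

# Comparison operators allowed in a "Done = ..." line.
OPS = {">=": operator.ge, "<=": operator.le, "<": operator.lt, ">": operator.gt}

@dataclass
class Criterion:
    metric: str       # e.g. "macro_f1", "mae", "latency_p95_ms" (hypothetical names)
    op: str           # one of the keys in OPS
    threshold: float  # target agreed in the client's Done = ... line

    def passes(self, observed: float) -> bool:
        return OPS[self.op](observed, self.threshold)

def check_done(criteria: list[Criterion], results: dict[str, float]) -> dict[str, bool]:
    """Pass/fail per criterion; a metric that was never measured counts as a fail."""
    return {
        f"{c.metric} {c.op} {c.threshold}": c.metric in results and c.passes(results[c.metric])
        for c in criteria
    }

done = [Criterion("macro_f1", ">=", 0.80), Criterion("latency_p95_ms", "<", 300)]
print(check_done(done, {"macro_f1": 0.83, "latency_p95_ms": 410}))
# {'macro_f1 >= 0.8': True, 'latency_p95_ms < 300': False}
```

Treating an unmeasured metric as a failure keeps "we didn't get to latency" from silently passing review.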

Put Done = … in the client’s language to reduce scope fights and speed approvals.

Proof that gets clicked (not ignored)

Replace portfolio dumps with one sharp artifact:

- Notebook (read-only): minimal repro with clear headings and final metrics.

- Metric chart: before/after AUROC/F1/MAE; one sentence of context.

- Looker/Power BI view: filtered to a relevant segment.

- Architecture sketch: simple diagram of ingestion → storage → transform → serve.

- Loom (60–90s): walk the result and decision you’d recommend next.

One artifact + one result beats five random links every time in an upwork ai proposal.

Want to see how this works in practice? Check out how a data analytics agency earned thousands and turned profitable with Gigradar.

Sub-category variants (plug-and-play middles)

Start with the core template and swap the middle to fit the lane.

1) Analytics & BI (KPI clarity)

- Plan: define KPI logic → build semantic layer/dbt models → publish dashboard.

- Proof: “Reduced report time; stakeholders aligned on shared definitions (screenshot).”

- Done = dashboard with calculated KPIs, filters, and owner docs.

2) Data engineering (ELT/warehouse)

- Plan: sources → staging → marts; tests for freshness/uniqueness; Airflow schedule.

- Proof: “ELT stabilized; late data incidents down (graph).”

- Done = dbt models + tests, DAG deployed, lineage documented.

3) Classic ML (tabular)

- Plan: baseline → feature set → cross-val; track with MLflow; fairness check.

- Proof: “F1 ↑ vs baseline; lift chart attached.”

- Done = pipeline notebook + artifact with metrics, feature importances, and calibration.

4) NLP (classification/NER/summarization)

- Plan: label review → tokenizer + model (spaCy/transformers) → eval set; hallucination guardrails if generative.

- Proof: “Macro-F1 ↑; error analysis shows gains in minority class.”

- Done = confusion matrix, error breakdown, and prompt/label schema docs.

5) Computer vision

- Plan: data curation → augmentation → model (Detectron/YOLO) → export; latency test.

- Proof: “mAP improved; robust to lighting variation (demo).”

- Done = mAP report, test images, and inference script meeting p95 latency.

6) LLM apps & RAG

- Plan: retrieval index (FAISS/Weaviate) → prompt chain → eval set (golden Q&A) → guardrails.

- Proof: “Answer accuracy ≥ threshold; fallback prompts reduced failures.”

- Done = evaluation notebook, gold set of 20 Q&A pairs, latency/accuracy table.

7) Forecasting

- Plan: decomposition → exogenous features → rolling CV; backtest stability.

- Proof: “MAE ↓ vs naive; seasonality captured (plot).”

- Done = forecast notebook + range bands; alert thresholds proposed.

8) MLOps & deployment

- Plan: containerize → CI pipeline → model registry → canary deploy; monitoring.

- Proof: “Rollbacks <5 min; drift alerts live (dashboard).”

- Done = CI config, registry entries, and runbook with SLOs.

Each variant makes your machine learning upwork proposal feel category-native instead of generic.
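The forecasting variant promises "rolling CV" and "MAE ↓ vs naive." Both can be backed by a short rolling-origin backtest. This is a minimal pure-Python sketch, assuming a univariate series. It uses a hypothetical moving-average forecaster as a stand-in for your real model, because the point is the evaluation loop rather than the model itself.

```python
from statistics import mean

def naive_forecast(history: list[float], horizon: int) -> list[float]:
    # Baseline: repeat the last observed value.
    return [history[-1]] * horizon

def moving_average_forecast(history: list[float], horizon: int, window: int = 3) -> list[float]:
    # Hypothetical stand-in for a real model: flat forecast at the recent mean.
    return [mean(history[-window:])] * horizon

def mae(actual: list[float], predicted: list[float]) -> float:
    return mean(abs(a - p) for a, p in zip(actual, predicted))

def rolling_origin_backtest(series, forecaster, initial: int, horizon: int) -> float:
    """Mean MAE over expanding-window folds: fit on series[:t], score the next `horizon` points."""
    errors = []
    for t in range(initial, len(series) - horizon + 1, horizon):
        train, test = series[:t], series[t:t + horizon]
        errors.append(mae(test, forecaster(train, horizon)))
    return mean(errors)

sales = [10, 12, 9, 11, 13, 10, 12, 11, 14, 10, 12, 13]
model_mae = rolling_origin_backtest(sales, moving_average_forecast, initial=6, horizon=2)
naive_mae = rolling_origin_backtest(sales, naive_forecast, initial=6, horizon=2)
print(f"model MAE {model_mae:.2f} vs naive {naive_mae:.2f}")
# model MAE 1.11 vs naive 2.00
```

Each fold trains only on data before its forecast origin, which is what keeps the "vs naive" comparison honest. A random K-fold split would leak future values into training.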

Metrics & success criteria buyers expect

Anchor proposals in measurable outcomes. Cite one primary metric and tie it to business impact.

- Classification: F1, AUROC, precision@k, calibration (Brier).

- Regression/forecast: MAE/MAPE/RMSE, coverage of prediction intervals.

- Ranking/recs: NDCG@k, Recall@k, MAP.

- LLM/RAG: exact-match / semantic similarity on golden set, groundedness rate, latency p95.

- Data/ELT: freshness SLA, test pass rate, failed job MTTR.

- Analytics: time-to-insight, adoption (active viewers), decision cadence.

Add one sentence on why that metric matters (e.g., “macro-F1 protects minority classes”).
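Several of these metrics are easy to misreport, so it helps to compute them the same way on every project. This is a minimal, dependency-free sketch of three of them: macro-F1, NDCG@k with linear gains, and nearest-rank p95 latency. In practice, scikit-learn's `f1_score(..., average="macro")` and `ndcg_score` cover the first two. Nearest-rank is only one of several common percentile definitions, so state which one you used.

```python
import math

def macro_f1(y_true: list, y_pred: list) -> float:
    """Unweighted mean of per-class F1, so minority classes count as much as majority ones."""
    scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

def ndcg_at_k(relevances: list[float], k: int) -> float:
    """NDCG@k for one ranked list; relevances are listed in the order the system ranked them."""
    def dcg(rels: list[float]) -> float:
        return sum(rel / math.log2(i + 2) for i, rel in enumerate(rels[:k]))
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0

def p95(latencies_ms: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * n, avoiding float rounding
    return ordered[rank - 1]

print(round(macro_f1(["a", "a", "b"], ["a", "b", "b"]), 3))  # 0.667
print(round(ndcg_at_k([3, 2, 3, 0], k=3), 3))
print(p95([120, 95, 180, 240, 110, 130, 105, 900, 115, 125]))  # 900
```

The last line shows why sample size belongs next to any p95 claim: with only 10 requests, nearest-rank p95 is simply the slowest one.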

Pricing & scope options that reduce friction

Don’t lead with a giant number in your opener. After the hook, offer options:

- A) Discovery micro-sprint (fixed): data profile + baseline + acceptance criteria + next-step plan.

- B) Implementation milestone (time-boxed): ship the agreed slice with Done = … and artifacts.

- C) Phase plan (range): full scope after A/B validates assumptions (timeline, risk, staffing).

Options calm sticker shock and show you’re outcome-driven—ideal for an upwork ai proposal across org sizes.

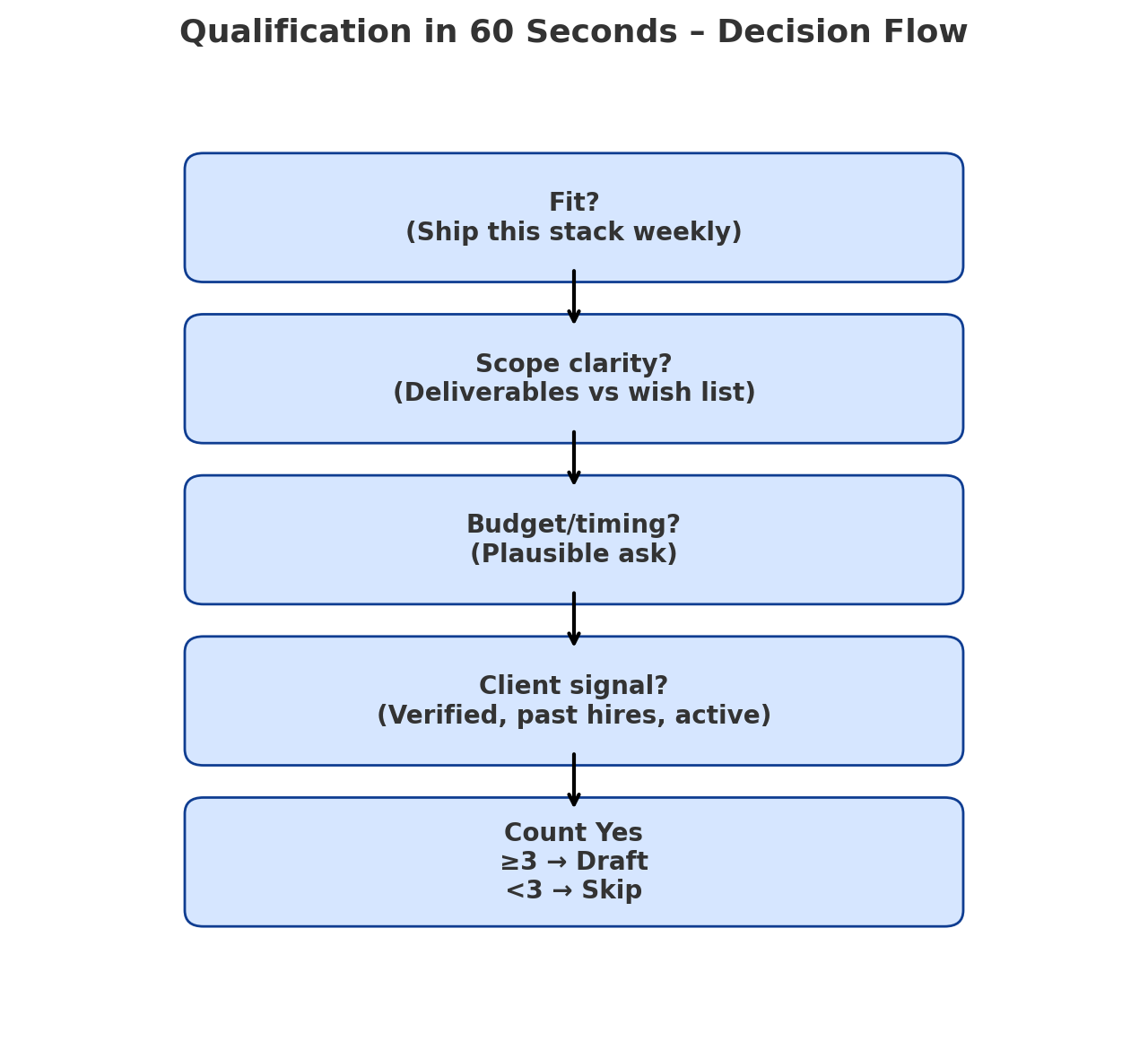

Qualification in 60 seconds (so you only write to fit)

Before drafting, run a quick triage:

- Fit: Do you ship this stack/problem weekly?

- Scope clarity: Deliverables vs wish list? Any red flags (free spec, policy dodges)?

- Budget/timing: Plausible for the task and your calendar?

- Client signal: Payment verified, past hires, recent activity, reasonable tone?

Three or four “yes” answers → draft. Fewer → clarify or skip. Focus is half the win.
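When someone other than the writer runs triage, encoding the four questions keeps the rule consistent. This is a minimal sketch, assuming a "yes" on three of the four questions is enough to draft. `JobTriage` is a hypothetical name, and the boolean answers still come from a human reading the post.

```python
from dataclasses import dataclass

@dataclass
class JobTriage:
    fit: bool               # you ship this stack/problem weekly
    scope_clear: bool       # deliverables, not a wish list; no red flags
    budget_timing_ok: bool  # plausible for the task and your calendar
    client_signal_ok: bool  # payment verified, past hires, recent activity, reasonable tone

    def decision(self) -> str:
        yes = sum([self.fit, self.scope_clear, self.budget_timing_ok, self.client_signal_ok])
        return "draft" if yes >= 3 else "clarify or skip"

print(JobTriage(fit=True, scope_clear=True, budget_timing_ok=False, client_signal_ok=True).decision())
# draft
```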

Governance, privacy, and ethics (quiet signals buyers notice)

Add one line that shows you build responsibly:

- PII: minimize fields, use masked samples, encrypt at rest/in transit, least-privilege access.

- Bias & fairness: monitor subgroup metrics; document trade-offs.

- Explainability: SHAP/feature attributions; model cards.

- Safety for LLMs: grounded retrieval, refusal policy, prompt leak guards.

- Compliance: GDPR awareness, data retention policy, offboarding checklist.

Trust levers raise reply and hire odds without adding fluff.
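The PII line ("minimize fields, use masked samples") is easy to make concrete before any data leaves the client. This is a minimal sketch, assuming records arrive as dicts. It keeps only the fields the task needs and replaces direct identifiers with keyed HMAC-SHA256 tokens, so joins still work. The field names are hypothetical. Under GDPR, pseudonymized data is still personal data, and the key belongs in a secret manager, not in code.

```python
import hashlib
import hmac

def mask_sample(rows: list[dict], keep: set[str], pseudonymize: set[str], secret: bytes) -> list[dict]:
    """Drop fields not needed for the task; replace identifiers with keyed hashes so joins still work."""
    masked = []
    for row in rows:
        out = {}
        for field in keep | pseudonymize:
            if field not in row:
                continue
            value = row[field]
            if field in pseudonymize:
                digest = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
                out[field] = digest[:16]  # shortened token; same input + same key -> same token
            else:
                out[field] = value
        masked.append(out)
    return masked

rows = [{"email": "a@example.com", "plan": "pro", "phone": "555-0100"}]
print(mask_sample(rows, keep={"plan"}, pseudonymize={"email"}, secret=b"load-from-secret-manager"))
```

A keyed hash is used instead of a plain SHA-256 because unkeyed hashes of emails or phone numbers can be reversed by hashing candidate values.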

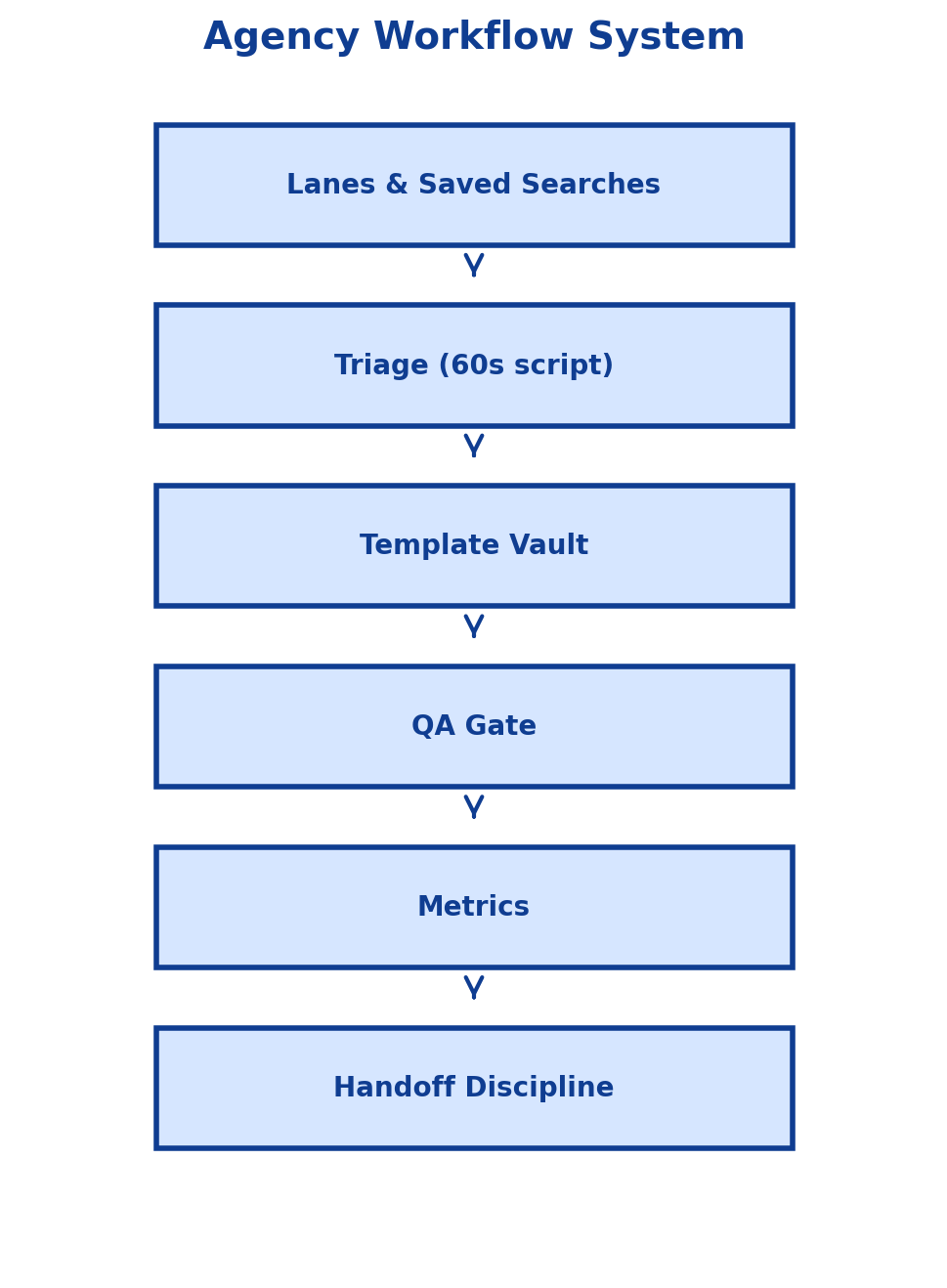

Agency section: turn craftsmanship into a system

To scale an upwork ai proposal operation without chaos:

- Lanes & saved searches: analytics, data engineering, tabular ML, NLP/CV, LLM/RAG, MLOps.

- Triage: PM/VA applies the 60-second script; only P1 jobs get drafted now.

- Template vault: openers by lane, “Done = …” library, artifact deck (notebooks, charts, Looms).

- QA gate: no send without two specifics, one micro-milestone, one proof, and a choice-based CTA.

- Metrics: reply/interview/win rate per lane; prune low performers monthly.

- Handoff discipline: access list, secret management, repo and environment setup, runbooks.

This makes every machine learning upwork proposal consistent and fast, no matter who drafts it.
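The Metrics bullet can run as a few lines of code over a log of sent proposals. This is a minimal sketch, assuming each proposal is recorded as a dict with hypothetical `lane`, `replied`, `interviewed`, and `won` fields. The pruning cutoffs are placeholders to tune, not benchmarks.

```python
from collections import defaultdict

def lane_funnel(proposals: list[dict]) -> dict[str, dict[str, float]]:
    """Reply, interview, and win rates per lane, each as a share of proposals sent."""
    counts = defaultdict(lambda: {"sent": 0, "replied": 0, "interviewed": 0, "won": 0})
    for p in proposals:
        c = counts[p["lane"]]
        c["sent"] += 1
        c["replied"] += p.get("replied", False)  # bools add as 0/1
        c["interviewed"] += p.get("interviewed", False)
        c["won"] += p.get("won", False)
    return {
        lane: {
            "sent": c["sent"],
            "reply_rate": c["replied"] / c["sent"],
            "interview_rate": c["interviewed"] / c["sent"],
            "win_rate": c["won"] / c["sent"],
        }
        for lane, c in counts.items()
    }

def lanes_to_review(funnel: dict, min_sent: int = 20, min_reply_rate: float = 0.10) -> list[str]:
    """Lanes with enough volume to judge and a reply rate under the (hypothetical) cutoff."""
    return sorted(
        lane for lane, m in funnel.items()
        if m["sent"] >= min_sent and m["reply_rate"] < min_reply_rate
    )
```

The `min_sent` floor stops a lane from being pruned after a handful of unlucky bids. Every rate uses proposals sent as its denominator, so the lanes stay directly comparable.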

Common mistakes (and fast fixes)

- Generic intros: If your opener fits any job, it fits no job. Fix: reference two specifics in sentence one.

- No acceptance criteria: “We’ll start” isn’t a plan. Fix: Done = … in the client’s words.

- Portfolio dumps: Ten links repel. Fix: one artifact + one metric.

- Metric vagueness: “High accuracy” means nothing. Fix: name the metric, baseline, and target (with tolerance).

- Ignoring data quality: Models can’t fix missing or dirty data. Fix: include a data profile step.

- Skipping guardrails: Silence on privacy and safety reads as risk to a careful buyer. Fix: add a line on privacy, fairness, or grounded answers for LLMs.
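A data profile step can start as small as the "types, nulls, uniques" summary from the exploratory-review milestone. This is a minimal pure-Python sketch, assuming a sample of records as dicts. It treats missing keys, `None`, and empty strings as nulls, which is an assumption to adjust per dataset. With pandas, `df.dtypes`, `df.isna().sum()`, and `df.nunique()` give similar views.

```python
from collections import Counter

def profile(rows: list[dict]) -> dict[str, dict]:
    """Per-column observed types, null count, and unique count for a sample of records."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]  # missing keys count as nulls
        present = [v for v in values if v is not None and v != ""]
        report[col] = {
            "types": dict(Counter(type(v).__name__ for v in present)),
            "nulls": len(values) - len(present),
            "uniques": len({repr(v) for v in present}),  # repr() also handles unhashable values
        }
    return report

sample = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": None}, {"id": "2"}]
for column, stats in profile(sample).items():
    print(column, stats)
```

A column with more than one entry under "types" (here `id` holds both int and str) is exactly the kind of data risk worth listing before any modeling starts.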

And while you avoid proposal mistakes, don’t forget about platform risks too. We recently broke down the most common Upwork scams and how to spot them.

Proposal QA checklist (run before you hit send)

- Two explicit details from the post in line 1–2.

- Micro-milestone includes Done = … stated in client language.

- One proof with a clickable artifact (notebook/chart/Loom).

- Metric(s) named with baseline/target or acceptance band.

- Access & security needs noted (sample data, creds, least-privilege).

- Time-zone/overlap stated + simple CTA (call vs async plan).

- The opening section is phone-friendly (~150–220 words).

If any box is unchecked, it’s not ready.
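Part of this checklist is mechanical enough to lint before a human reads the draft. This is a minimal sketch, assuming the opening section is plain text. The "exactly one link" rule is this sketch's reading of "one proof with a clickable artifact." The checks for specifics and client language still need a person.

```python
import re

def qa_flags(opening: str, min_words: int = 150, max_words: int = 220) -> list[str]:
    """Mechanical subset of the QA checklist; returns a list of problems (empty = passes)."""
    flags = []
    words = len(opening.split())
    if not min_words <= words <= max_words:
        flags.append(f"opening is {words} words (target {min_words}-{max_words})")
    if "Done =" not in opening:
        flags.append("no 'Done = ...' acceptance criteria")
    links = re.findall(r"https?://\S+", opening)
    if len(links) != 1:
        flags.append(f"{len(links)} links (expected exactly one artifact)")
    if not re.search(r"\b(time zone|timezone|overlap)\b", opening, re.IGNORECASE):
        flags.append("no time-zone/overlap line")
    return flags

print(qa_flags("Quick note. Done = baseline logged. https://example.com/a https://example.com/b"))
```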

12 opener lines you can steal (then personalize)

- “Because you’re on BigQuery + dbt, I’ll ship a mart + tests and publish a KPI-clean dashboard this week.”

- “Your churn model needs calibrated probabilities; I’ll baseline and deliver SHAP with a lift chart.”

- “If forecast error spikes on promotions, we’ll add event flags and cross-validate with holidays.”

- “For NER on messy text, I’ll start with a label audit and macro-F1 target to protect minority classes.”

- “Your RAG stack needs grounded answers; I’ll build a golden set and validate accuracy + latency.”

- “Let’s stabilize ELT: incremental models, freshness tests, and Airflow retries so dashboards stop lying.”

- “Because stakeholders debate KPIs, I’ll define logic once in dbt and mirror it in BI.”

- “We can cut inference latency with batch pre-compute and a small on-device model where needed.”

- “For recommendations, I’ll benchmark offline NDCG and propose an online A/B plan.”

- “Your CV pipeline struggles in low light; I’ll augment data and test mAP lifts.”

- “Analytics is noisy; I’ll align GA4 events with SQL so product questions are answerable in minutes.”

- “We’ll ship a model registry + CI so promotions are tracked, reproducible, and reversible.”

Paste, customize, ship.

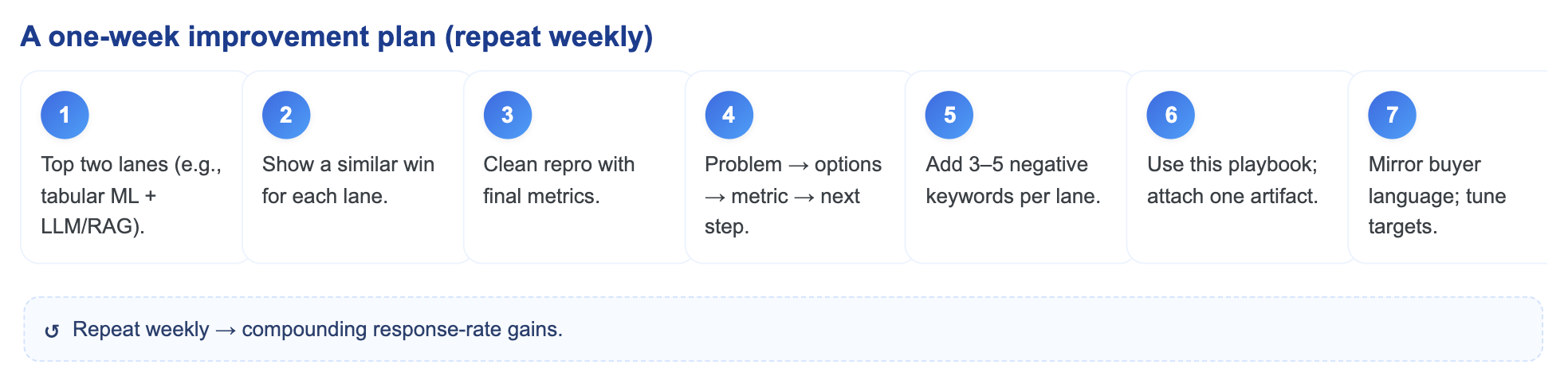

A one-week improvement plan (that compounds)

- Day 1: Draft “Done = …” lines for your top two lanes (e.g., tabular ML + LLM/RAG).

- Day 2: Record a 60–90s Loom per lane walking a similar win.

- Day 3: Prepare one clean, read-only notebook per lane with final metrics.

- Day 4: Build a 1-page decision memo template (problem → options → metric → next step).

- Day 5: Clean saved searches; add 3–5 negative keywords per lane.

- Day 6: Send two proposals using this playbook; attach exactly one artifact each.

- Day 7: Review replies; refine “Done = …” wording and metric targets to mirror buyer language.

Repeat weekly. Your response rate should rise without adding hours.

Final thoughts

Great proposals for data & AI aren’t long—they’re specific. Anchor your upwork ai proposal in the client’s data, KPI, and stack, propose a micro-milestone with “Done = …” in their words, and back it with one relevant artifact. Wrap that discipline into a reusable upwork data science proposal template, and every machine learning upwork proposal you send will feel like a safe, obvious “yes.” Keep promises small and testable, communicate clearly, protect data, and let consistent delivery turn more bids into interviews—and interviews into shipped, measured, trustworthy AI.
